Anna (Anya) Ivanova

Assistant Professor

Georgia Institute of Technology

Hi!

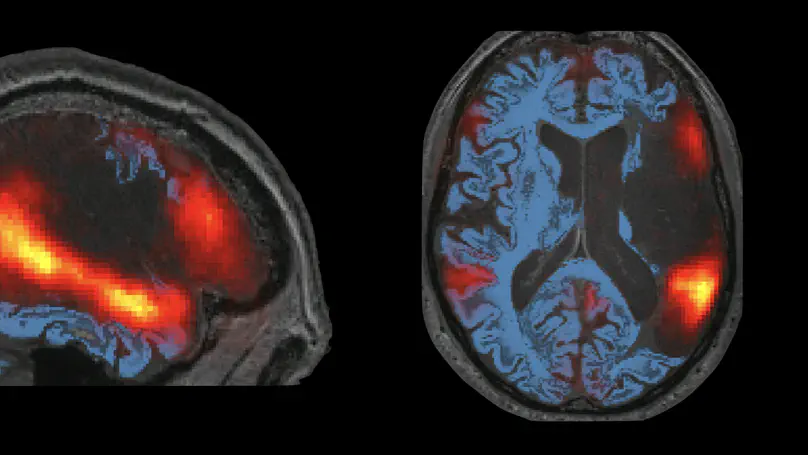

I am an Assistant Professor of Psychology at the Georgia Institute of Technology, interested in the relationship between language and other aspects of human cognition. In my work, I use tools from cognitive neuroscience (such as fMRI) and artificial intelligence (such as large language models).

To learn more, browse my lab’s website (Language, Intelligence, and Thought) or watch this 5-minute TEDx talk about applying insights from neuroscience to better understand the capabilities of large language models.

You can contact me at a.ivanova [at] gatech [dot] edu or follow me on Twitter.

Interests

- Neuroscience of language and cognition

- The link between language and world knowledge in humans and in AI models

- Inner speech

Education

PhD in Brain & Cognitive Sciences, 2022

Massachusetts Institute of Technology

BS in Neuroscience & Computer Science, 2017

University of Miami

Position

- Head of the Language, Intelligence, and Thought (LIT) lab

- Leveraging experimental tools to jointly analyze biological and artificial intelligence

- Developing a large-scale benchmark to evaluate world knowledge in language models

- Designing a platform to enable all researchers to study world knowledge in machines using custom tests / models

Featured Publications

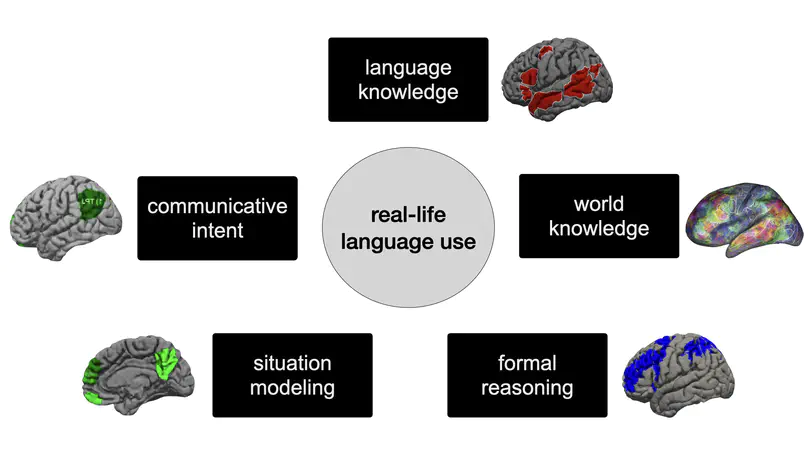

Large language models (LLMs) have come closest among all models to date to mastering human language, yet opinions about their capabilities remain split. Here, we evaluate LLMs using a distinction between formal competence (knowledge of linguistic rules and patterns) and functional competence (understanding and using language in the world). We ground this distinction in human neuroscience, showing that these skills recruit different cognitive mechanisms. Although LLMs are close to mastering formal competence, they still fail at functional competence tasks, which often require drawing on non-linguistic capacities. In short, LLMs are good models of language but incomplete models of human thought.

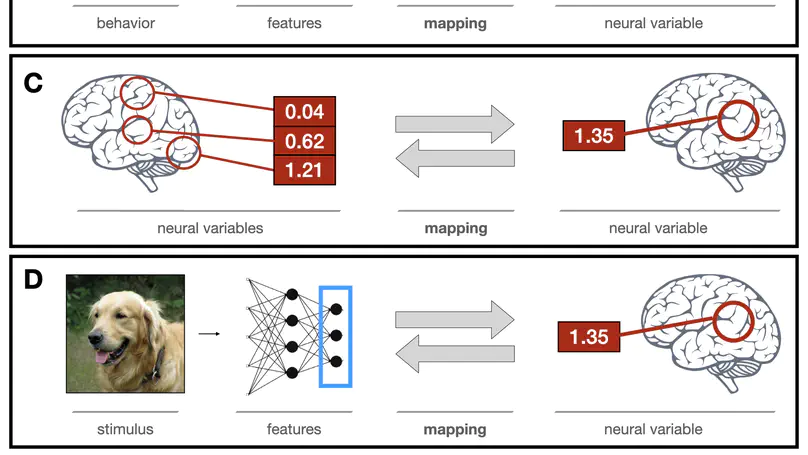

Many cognitive neuroscience studies use large feature sets to predict and interpret brain activity patterns. Of crucial importance in all these studies is the mapping model, which defines the space of possible relationships between features and neural data.

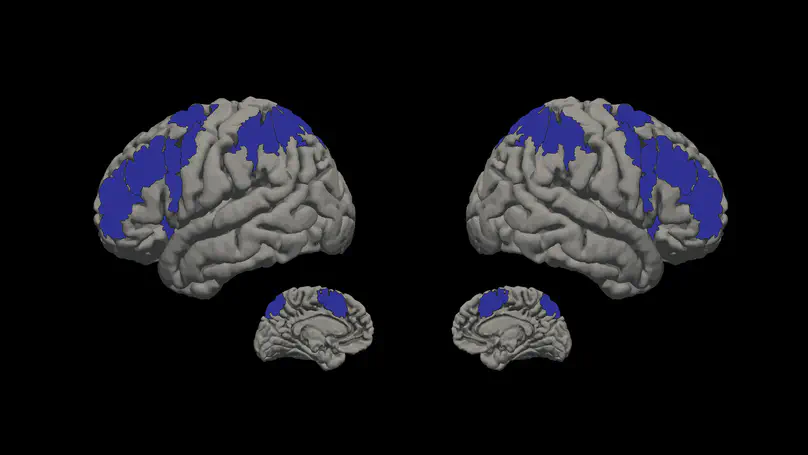

The ability to combine individual concepts of objects, properties, and actions into complex representations of the world is often associated with language. Yet combinatorial event-level representations can also be constructed from nonverbal input, such as visual scenes. Here, we test whether the language network in the human brain is involved in and necessary for semantic processing of events presented nonverbally.